As regular readers will know, I’ve been a little critical of Google of late when it comes to their disclosure of vulnerabilities in other people’s software.

In the past few weeks, Google has not only publicly disclosed a number of zero-day vulnerabilities in Microsoft Windows and OS X, but has also released proof-of-concept code demonstrating how to exploit them.

The obvious danger is that malicious hackers could use these disclosures as a blueprint for attacks.

To make things worse, in some cases Google’s security researchers knew that the bugs were already scheduled to be patched within a matter of days – and yet chose to tell the world how clever they were anyway, and potentially put many users at risk.

This isn’t new behaviour by Google’s Project Zero security researchers. Way back in 2010, Google’s Tavis Ormandy publicly released details of a zero-day vulnerability in Microsoft’s code, only for online criminals to exploit it to spread a Trojan horse days later.

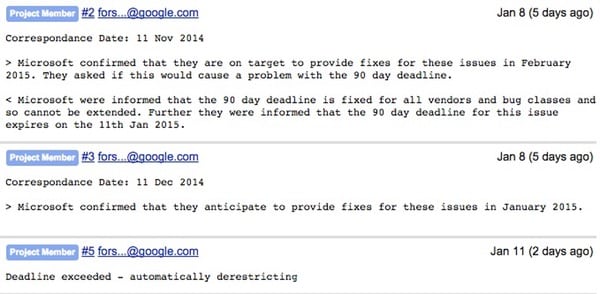

What’s different is that Google announced last year that it would only be giving software vendors 90 days to fix their bugs – after which, the technology giant felt, it was acceptable to make them public for the world to know about.

That is despite, for instance, Microsoft begging Google to hold off releasing details of security holes when patches were not only in the works, but about to be released.

You could argue that that wasn’t terribly friendly behaviour by Google.

Perhaps smarting from the negative reaction from many commentators, Google has now said it is adjusting its policy… a little bit.

We have taken on board some great debate and external feedback around some of the corner cases for disclosure deadlines. We have improved the policy in the following ways:

* Weekends and holidays. If a deadline is due to expire on a weekend or US public holiday, the deadline will be moved to the next normal work day.

* Grace period. We now have a 14-day grace period. If a 90-day deadline will expire but a vendor lets us know before the deadline that a patch is scheduled for release on a specific day within 14 days following the deadline, the public disclosure will be delayed until the availability of the patch. Public disclosure of an unpatched issue now only occurs if a deadline will be significantly missed (2 weeks+).

* Assignment of CVEs. CVEs are an industry standard for uniquely identifying vulnerabilities. To avoid confusion, it’s important that the first public mention of a vulnerability should include a CVE. For vulnerabilities that go past deadline, we’ll ensure that a CVE has been pre-assigned.

As always, we reserve the right to bring deadlines forwards or backwards based on extreme circumstances.

Well, that’s a bit better. And the 14-day grace period should help avoid the scenario of Google announcing software flaws a day or two before Microsoft is due to fix them in a Patch Tuesday update.

But I’m still concerned that Google isn’t acting in the best interest of most internet users with its full-disclosure policy and its release of proof-of-concept code.

Surely, to pressure a software vendor into fixing a bug, Google doesn’t have to put the means of exploiting a vulnerability into the hands of those who might use it for malicious purposes?

What Google’s Project Zero team has never satisfactorily explained is what would be so wrong with privately demonstrating an unpatched vulnerability to a member of the technology press. The media should always be the preferred mechanism for getting a software bug fixed if a vendor is being too slow, rather than putting members of the internet community at risk.

Proof-of-concept code is absolutely vital for enterprises to validate whether they are vulnerable to a particular exploit. They quite simply do not have the resources to build tools from scratch to check their susceptibility to every vulnerability. Showing the code to the media won't help with that.

Meanwhile, malicious attackers do have the drive and resources to do so, and therefore the release of proof-of-concept code makes very little difference to them.

So I think the practice is justified.

Wrong.

You don't need exploit code to test for a vulnerability. This has been shown over the years, and it isn't hard to comprehend. The recent GHOST disclosure (the glibc gethostby* functions)[1] is an example of this. No, it has nothing to do with the corporations; the GHOST disclosure itself included the tests. And I'm sorry, but PoC code for the exploit itself is something different. Need another example? Take Shellshock in GNU bash (the 'Bourne Again SHell'). Sure, in that case, because the bug is simply arbitrary code execution caused by not properly sanitising input, the test could arguably double as an attack, but note that the test did not use an exploit per se[2].

Lastly, you're ignoring (or forgetting, or unaware of) script kiddies, who lack the skill to write an exploit but can certainly run, and sometimes tweak, one that has been published, which makes the problem even worse. So no, it isn't just those with the ability who benefit. It isn't that simple (nothing is).

[1] The actual test didn't execute any (arbitrary) code on a remote system, or even on the local system. Of course, the functions have been obsolete for ages, but that's another issue entirely. Furthermore, testing for the flaw didn't require you to write an exploit, which means there wasn't any PoC code in the first place.

[2] It showed how to test locally, but to attack a system you would have to know how to force it to pass the code to the shell as input, and not all systems would allow this, I might add (a web server might or might not, and that's before considering any additional filters the web server might have). Of course, just like GHOST, patches already existed before the disclosure took place. I know this because I had already received the updates when the disclosures took place. (Okay, technically, for GHOST the patch existed but hadn't been applied as such; but as I pointed out, those functions aren't used nearly as much any more, since they date from the days when IPv6 was barely used or didn't exist at all. Shellshock, however, was already fixed, and while there was more than one CVE, the serious one had been fixed; indeed, I think I had applied the fix to my server (and main system) the day before the disclosure.)

Before I comment on something else (explaining what Graham means by demonstrating to the media), I want to remark on something much more important and significant to me personally. I appreciate, and I stress this, your use of the terms 'malicious' and 'attacker' rather than the word most people would expect: 'hacker', whose common meaning is the only one most people know. It isn't easy to do, and it isn't often done. Thank you for that. It is something my past, present and future self appreciates a great deal, more than you (and indeed most people) could imagine. A few of my friends, people I've known for many, many years from the same scene, relay this message, but most people don't get it at all (let alone know the distinction exists). So really, this is not overkill; it is simply being appreciative. Too many dismiss appreciation, but I do not!

And now the message:

Something else I want to remark on (now that I've read your message word for word, and while somewhat awake): what Graham means by going to the media is a way to get the vendor to fix the flaw, not a way to help customers. (But again, that justification is invalidated when a patch is already in the works: the vendor IS fixing it and is therefore NOT ignoring it, so the excuse becomes inexcusable[1].) I'll bring up an actual example involving Microsoft from years gone by, but the summary is this: if a vendor refuses to acknowledge a serious flaw, Graham is suggesting that the researcher(s) demonstrate it to the media, shaming the vendor, instead of releasing an exploit and letting anyone abuse it.

Microsoft itself is guilty here. To elaborate: when the cDc (Cult of the Dead Cow), the creators of the Back Orifice backdoor family, released BO2k (or maybe it was the original Back Orifice; it has been too long to recall specifics, although I know the cDc still have references to it somewhere on their site), Microsoft dismissed the concerns outright on their website and stated that there was nothing to worry about. They got so much wrong it is hard to fathom, including the release date of the backdoor itself! (Yes, this means Microsoft did deny that it was a risk[2].) What Graham is saying, then, is that the other approach would be to demonstrate it to the media, forcing Microsoft to acknowledge it. In that case, it seems to me the cDc did just as well, since nothing would have convinced Microsoft otherwise, and in the end nothing did (I admit I am biased here for certain reasons, but that's beside the point). That's the idea, that's the difference, and that is where Graham is coming from. It is a very contentious issue, however, with many things to consider; it varies a great deal from case to case. (As for demonstrations, if memory serves, cDc demonstrated it at DEF CON in Las Vegas. Yes, it is an old convention… it makes me feel rather older than I am, I admit, but experience is very useful, so I'll own it.)

[1] Which Microsoft was doing. Yes, yes, they were very slow about it. But anyone claiming that the exploit was released to force them to fix the flaw before someone with malicious intent finds and exploits it is lying to others and, more importantly, to themselves, whether they realise it or not. Whether doing it is inexcusable is debatable, but it certainly isn't a valid excuse at this point (how could it be, when the claim is already false?).

[2] Incidentally, Microsoft no longer has this rebuttal on their site, but it still exists in other places.

… and I hope this adds up okay… sensing the wake-state transitioning to sleepy state…

I know this doesn't apply to Google, but what is an independent researcher supposed to do when they discover a flaw? I recently discovered a flaw in the description of RSA in the reference books, which leads to there being at least two decryption keys per encryption key, and usually more*. I looked into it further and found that RSA knew about it and had taken measures to deal with it. But say they hadn't: how would I go about making sure RSA listened?

*For the mathematicians: the decryption key d only needs to be the inverse of the encryption key e modulo lcm(p-1, q-1), and lcm(p-1, q-1) = (p-1)(q-1)/gcd(p-1, q-1), i.e. (p-1)(q-1) divided by the factors the two have in common. If p, q > 2, then since p and q are prime they are odd, so p-1 and q-1 are both even and share a common factor of at least 2. The LCM is therefore at most (p-1)(q-1)/2, which means there are at least two numbers below n = pq that are congruent to d modulo lcm(p-1, q-1), and each of them works as a decryption key.
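To make the footnote concrete, here is a minimal Python sketch that lists every working decryption exponent below n for a given public key. The function name `equivalent_decryption_keys` is hypothetical, and the parameters p = 61, q = 53, e = 17 are assumed toy values chosen purely for illustration (real RSA uses primes hundreds of digits long). It relies on the built-in three-argument `pow` with exponent -1 for the modular inverse, which requires Python 3.8 or later.

```python
import math


def equivalent_decryption_keys(p, q, e):
    """Return every exponent d' in [1, n) that decrypts RSA ciphertexts for (n = p*q, e).

    A private exponent only has to satisfy e * d' ≡ 1 (mod lcm(p-1, q-1)),
    so every value congruent to the smallest such d modulo lcm(p-1, q-1)
    works equally well.
    """
    n = p * q
    # lcm(p-1, q-1) = (p-1)(q-1) / gcd(p-1, q-1); for odd primes the gcd is >= 2.
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    d_min = pow(e, -1, lam)  # modular inverse of e mod lam (Python 3.8+)
    return list(range(d_min, n, lam))


if __name__ == "__main__":
    # Toy parameters for illustration only; far too small for real use.
    p, q, e = 61, 53, 17
    n = p * q
    keys = equivalent_decryption_keys(p, q, e)
    print(keys)  # [413, 1193, 1973, 2753]

    # Encrypt a sample message and check that every key recovers it.
    m = 65
    c = pow(m, e, n)
    print(all(pow(c, d, n) == m for d in keys))  # True
```

Here gcd(60, 52) = 4, so there are four valid exponents below n rather than the two guaranteed minimum. The last one, 2753, is what you get by inverting e modulo (p-1)(q-1) = 3120, which is how many reference books present it, while 413 is the smallest exponent that works.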

Correct; it doesn't apply to Google.

However, how you address it is simple: report it to them. If they ignore you, try again, and try any number of ways. Going public is the last resort, and that is when you're doing it for the good of everyone (whether a 0-day disclosure is advisable is another issue; I would argue it is a case-by-case decision, not a binary one). That is how you make sure RSA listens. See also below (although I realise an algorithm deficiency is quite different from a server flaw, the idea still applies):

It used to be commonplace (maybe it still is… I don't really know) for someone to compromise a server and then either patch it for the admin and leave a message (local mail), or just leave a message (again, 'mail') describing the problems. There have even been worms that patched the holes they used (and some, possibly including those that patched, would make it absolutely clear that the site had been compromised so the admin would be aware of it). One worm that comes to mind is the Ramen worm.

So there absolutely are ways to deal with it. Some are more ethical than others, some are 100% legal and some are not… but there are ways, always.

This:

"To make things worse, in some cases Google's security researchers knew that the bugs were already scheduled to be patched within a matter of days – and yet chose to tell the world how clever they were anyway, and potentially put many users at risk."

is the crux of it all. Okay, fine, these corporations shouldn't take as long as they do (I'll be the first to admit it: they take far too long, and that is an understatement; however, that is how it is, so deal with it or don't use their software, since no one is forcing you to!). But they're corporations, doing the work on company time (and they might have escalations elsewhere). Besides that, it isn't their passion (that last point explains a lot of other related things, I might add).

Incidentally, when Google was writing about SSL (when they really mean TLS, I might add; most people use the terms interchangeably, but they are not the same thing. While TLS has many flaws, SSL is entirely flawed, which is why TLSv1.2 is the only version considered 'secure', and even that isn't enough on its own: which ciphers? What key sizes? Any exploits found, including those that downgrade ciphers (including MitM attacks)? Clients downgrading themselves, directly or indirectly? etc.) and about how other sites should only be served encrypted (which is a fallacy: for a site that hosts a collection of artwork, with no customers, no authentication through the web server, etc., the web server has no need for encryption!), they were writing it on an http (not https) site, AND they themselves acknowledged they would be taking some time to migrate over to SSL (see above).

"That is despite, for instance, Microsoft begging Microsoft to hold off releasing details of security holes…"

Typo? I think Microsoft begged Google.

Ahh. Yes. Well spotted!